Serverless architecture is a way to build and deploy applications and services without managing the underlying infrastructure. With a serverless architecture, developers can focus on their core product instead of provisioning and operating servers or runtimes, whether in the cloud or on-premises.

One workload that is well suited to serverless is file processing, particularly complex workflows that involve several transformations and analyses. This post walks through such a workflow.

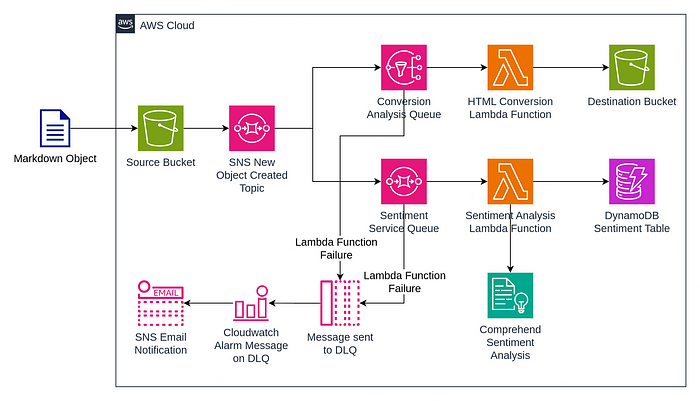

Serverless Real-time File Processing Architecture

The Real-time File Processing architecture is a general-purpose, event-driven, parallel data processing architecture built on AWS Lambda. It is well suited to workloads that need to derive more than one output from a single object.

In this example, we convert interview notes written in Markdown to HTML and analyze their sentiment, using S3 events to trigger two parallel processing flows.

Architecture Diagram

Application Components

Event Trigger

Individual files are processed as soon as they arrive, using Amazon S3 event notifications and Amazon Simple Notification Service (SNS). When an object is created in S3, an event is sent to an SNS topic, which delivers it to two separate Amazon SQS queues, one for each workflow.
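Because S3 publishes to SNS and SNS forwards to SQS, each message a Lambda function reads from its queue wraps the S3 event twice. The sketch below shows one way to unwrap it, assuming SNS raw message delivery is left disabled (the default). The function name `s3_objects_from_sqs_record` is hypothetical.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_sqs_record(record: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from one SQS record carrying an SNS-wrapped S3 event."""
    # Layer 1: the SQS message body is the SNS notification envelope (JSON text).
    sns_envelope = json.loads(record["body"])
    # Layer 2: the SNS "Message" field holds the S3 event notification (also JSON text).
    s3_event = json.loads(sns_envelope["Message"])
    objects = []
    # S3 test events (sent when a notification is first configured) have no "Records".
    for s3_record in s3_event.get("Records", []):
        bucket = s3_record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become "+"), so decode them.
        key = unquote_plus(s3_record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects
```

Keeping this unwrapping in one helper means both workflows can share it, and the S3 test event is skipped instead of raising an error.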

Conversion Workflow

The Conversion Workflow processes Markdown files uploaded to the UploadBucket, converts them to HTML, and stores the results in the OutputBucket. It is driven by the ConversionQueue, an SQS queue that receives the S3 event JSON payloads. Queuing the events decouples the function from the upload rate and lets failed messages be retried. Messages that still cannot be processed are moved to the ConversionDLQ, a dead-letter queue, which triggers a CloudWatch alarm to alert stakeholders.

Sentiment Analysis Workflow

The Sentiment Analysis Workflow runs in parallel with the Conversion Workflow and processes the same Markdown files from the UploadBucket. It detects the overall sentiment of each file and stores the result in the SentimentTable. Sentiment detection is powered by Amazon Comprehend, a natural language processing service that uses machine learning to extract insights from text. Like the Conversion Workflow, the Sentiment Analysis Workflow uses an SQS queue (SentimentQueue) and a dead-letter queue for error handling and alarming.
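A minimal sketch of the Comprehend call, assuming a boto3 Comprehend client (`boto3.client("comprehend")`) and English text. DetectSentiment limits the input to roughly 5 KB of UTF-8, so this sketch conservatively trims to 4,500 bytes; the helper names and the chosen limit are assumptions, not part of the original setup.

```python
# Conservative byte limit, below DetectSentiment's documented ~5 KB input cap.
MAX_SENTIMENT_BYTES = 4500


def truncate_utf8(text: str, max_bytes: int = MAX_SENTIMENT_BYTES) -> str:
    """Trim text to at most max_bytes of UTF-8 without splitting a character."""
    encoded = text.encode("utf-8")[:max_bytes]
    # errors="ignore" drops a trailing partial multi-byte character left by the slice.
    return encoded.decode("utf-8", errors="ignore")


def detect_sentiment(comprehend, text: str, language_code: str = "en") -> dict:
    """Call DetectSentiment through a boto3 Comprehend client and keep the key fields."""
    response = comprehend.detect_sentiment(
        Text=truncate_utf8(text), LanguageCode=language_code
    )
    return {
        "sentiment": response["Sentiment"],  # POSITIVE, NEGATIVE, NEUTRAL or MIXED
        "scores": response["SentimentScore"],  # Positive/Negative/Neutral/Mixed floats
    }
```

Truncation means only the start of a long note is scored. For longer documents, splitting the text into chunks and calling `batch_detect_sentiment` would be an alternative.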

Implementation

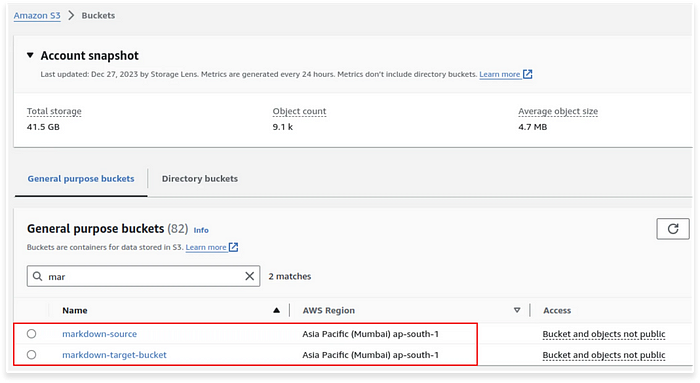

Step 1: Create S3 buckets

The first step is to create two S3 buckets.

The markdown-source bucket receives the uploaded Markdown files, while the markdown-target-bucket bucket stores the .html files produced when the Lambda function converts them.
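If you script this step instead of using the console, the one detail that commonly trips people up is the region argument. The sketch below assumes a boto3 S3 client created with `boto3.client("s3", region_name=region)`; the helper names are hypothetical. Bucket names are global, so you may need to add a unique suffix to the names used here.

```python
def create_bucket_kwargs(name: str, region: str) -> dict:
    """Build arguments for boto3's S3 create_bucket in the given region."""
    kwargs = {"Bucket": name}
    # us-east-1 is the default and must NOT be passed as a LocationConstraint;
    # every other region has to be given explicitly.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_buckets(s3, names: list[str], region: str) -> None:
    """Create each bucket with a boto3 S3 client."""
    for name in names:
        s3.create_bucket(**create_bucket_kwargs(name, region))
```

For example, `create_buckets(s3, ["markdown-source", "markdown-target-bucket"], "eu-north-1")`.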

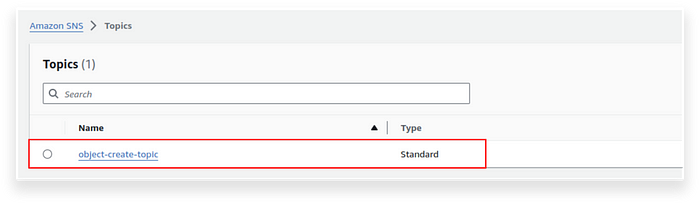

Step 2: Create an SNS Topic

The SNS topic acts as the event messenger in this architecture: it notifies the Markdown conversion and sentiment analysis workflows when new files arrive.

Create an SNS topic as shown below.

Attach the following access policy to the topic so that Amazon S3 is allowed to publish to it:

```json
{
  "Version": "2012-10-17",
  "Id": "example-ID",
  "Statement": [
    {
      "Sid": "Example SNS topic policy",
      "Effect": "Allow",
      "Principal": {
        "Service": "s3.amazonaws.com"
      },
      "Action": "SNS:Publish",
      "Resource": "arn:aws:sns:<AWS-REGION>:<YOUR-ACCOUNT-ID>:<YOUR-SNS-TOPIC>",
      "Condition": {
        "StringEquals": {
          "aws:SourceAccount": "<YOUR-ACCOUNT-ID>"
        },
        "ArnLike": {
          "aws:SourceArn": "arn:aws:s3:*:*:<SOURCE-BUCKET>"
        }
      }
    }
  ]
}
```

The SNS topic enables a fan-out pattern: it broadcasts a single event message to multiple downstream subscribers, in this case two SQS queues.
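For readers who prefer to script this step, here is a sketch that creates the topic and attaches the same access policy with boto3's SNS client (`create_topic` and `set_topic_attributes`). The helper names and the example topic name are hypothetical.

```python
import json


def s3_publish_policy(topic_arn: str, account_id: str, source_bucket: str) -> dict:
    """Topic policy letting one S3 bucket in one account publish to the topic."""
    return {
        "Version": "2012-10-17",
        "Id": "example-ID",
        "Statement": [
            {
                "Sid": "Example SNS topic policy",
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "SNS:Publish",
                "Resource": topic_arn,
                "Condition": {
                    "StringEquals": {"aws:SourceAccount": account_id},
                    "ArnLike": {"aws:SourceArn": f"arn:aws:s3:*:*:{source_bucket}"},
                },
            }
        ],
    }


def create_topic_with_policy(sns, topic_name: str, account_id: str, source_bucket: str) -> str:
    """Create (or look up) the topic with a boto3 SNS client and attach the policy."""
    # create_topic returns the existing ARN if you already own a topic with this name.
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    sns.set_topic_attributes(
        TopicArn=topic_arn,
        AttributeName="Policy",
        AttributeValue=json.dumps(s3_publish_policy(topic_arn, account_id, source_bucket)),
    )
    return topic_arn
```

The `aws:SourceAccount` condition guards against another account's bucket with the same name publishing to your topic.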

Step 3: Create SQS queues

The SQS queues act as buffers between the SNS event stream and the Lambda functions. They smooth out bursts of events so the functions are not overloaded, and they keep messages that fail processing so they can be retried.

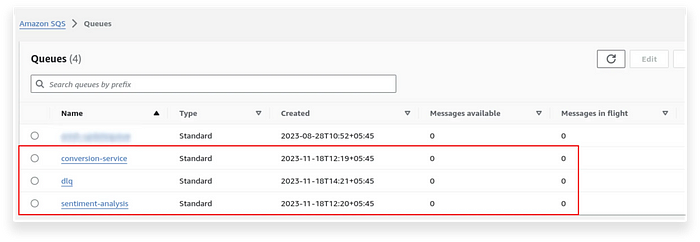

Create three SQS queues, conversion-service, sentiment-analysis, and dlq, as shown below.

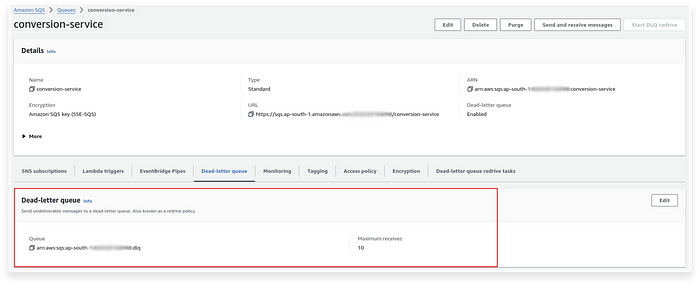

For the conversion-service and sentiment-analysis queues, set dlq as the dead-letter queue.

The figure below shows dlq being attached to the conversion-service queue.
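The same dead-letter configuration can be applied with boto3's SQS client. In this sketch, `maxReceiveCount` of 5 and the 180-second visibility timeout are assumptions: AWS recommends a source-queue visibility timeout of at least six times the Lambda function timeout, and 180 seconds assumes a 30-second function. The helper names are hypothetical.

```python
import json


def queue_attributes(dlq_arn: str, max_receive_count: int = 5) -> dict:
    """SQS attributes that route repeatedly failing messages to a dead-letter queue."""
    return {
        # After max_receive_count unsuccessful receives, SQS moves the message to the DLQ.
        "RedrivePolicy": json.dumps(
            {"deadLetterTargetArn": dlq_arn, "maxReceiveCount": str(max_receive_count)}
        ),
        # Assumed 30 s function timeout x 6, following the AWS recommendation.
        "VisibilityTimeout": "180",
    }


def attach_dlq(sqs, queue_name: str, dlq_name: str) -> None:
    """Attach the DLQ to a source queue using a boto3 SQS client."""
    queue_url = sqs.get_queue_url(QueueName=queue_name)["QueueUrl"]
    dlq_url = sqs.get_queue_url(QueueName=dlq_name)["QueueUrl"]
    dlq_arn = sqs.get_queue_attributes(
        QueueUrl=dlq_url, AttributeNames=["QueueArn"]
    )["Attributes"]["QueueArn"]
    sqs.set_queue_attributes(QueueUrl=queue_url, Attributes=queue_attributes(dlq_arn))
```

Usage: `attach_dlq(sqs, "conversion-service", "dlq")` and `attach_dlq(sqs, "sentiment-analysis", "dlq")`.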

Step 4: Create IAM Roles

Next, create IAM execution roles for the Lambda functions. Each role grants its function permission to call the services it needs for file processing.

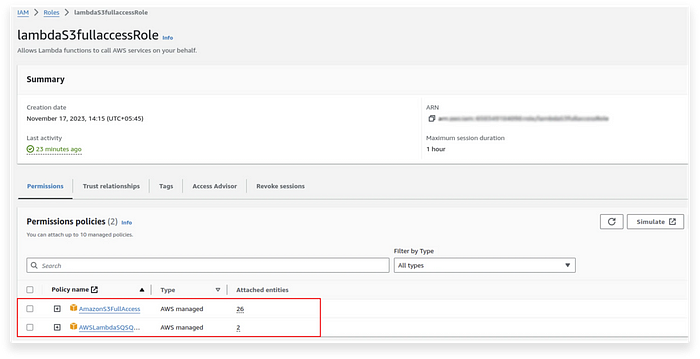

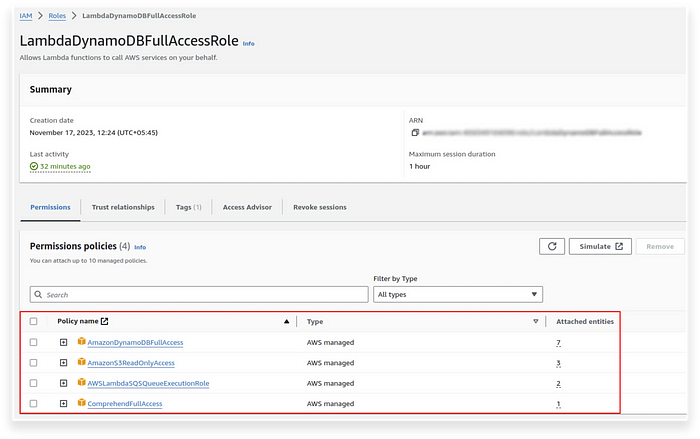

Create two roles, one for each Lambda function.

First, create a role for the html-conversion function and attach the policies as shown below.

Second, create another role for the sentiment-analysis function and attach the policies as shown below.

Note: The role names shown here are a little misleading, because I did not know in advance which policies each role would need.

More descriptive names would be better.
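As a least-privilege alternative to broad managed policies, the permissions each function needs can be written out as policy documents. This is a sketch under assumptions: the statement layout, the `Resource: "*"` used for Comprehend and logs (for simplicity), and the helper names are mine, not taken from the screenshots.

```python
# Trust policy that lets the Lambda service assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "lambda.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}


def execution_policy(queue_arn: str, extra_statements: list[dict]) -> dict:
    """Base permissions for a Lambda function triggered by an SQS queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Needed by the SQS trigger to poll and delete messages.
                "Effect": "Allow",
                "Action": ["sqs:ReceiveMessage", "sqs:DeleteMessage", "sqs:GetQueueAttributes"],
                "Resource": queue_arn,
            },
            {   # Lets the function write logs to CloudWatch Logs.
                "Effect": "Allow",
                "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": "*",
            },
            *extra_statements,
        ],
    }


def conversion_policy(queue_arn: str, source_bucket: str, target_bucket: str) -> dict:
    return execution_policy(queue_arn, [
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": f"arn:aws:s3:::{source_bucket}/*"},
        {"Effect": "Allow", "Action": "s3:PutObject", "Resource": f"arn:aws:s3:::{target_bucket}/*"},
    ])


def sentiment_policy(queue_arn: str, source_bucket: str, table_arn: str) -> dict:
    return execution_policy(queue_arn, [
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": f"arn:aws:s3:::{source_bucket}/*"},
        {"Effect": "Allow", "Action": "comprehend:DetectSentiment", "Resource": "*"},
        {"Effect": "Allow", "Action": "dynamodb:PutItem", "Resource": table_arn},
    ])
```

Splitting the policies this way also makes the role names easier to choose, since each role's purpose is visible in its permissions.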

Step 5: Create Lambda functions

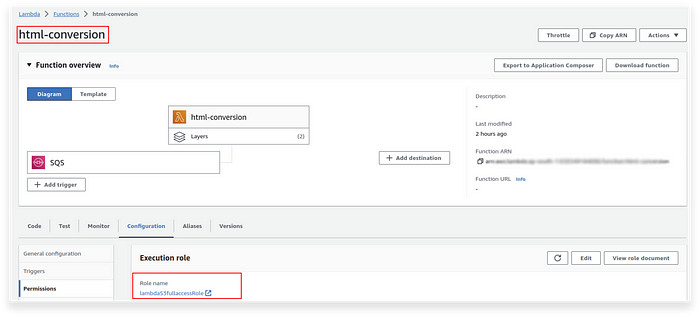

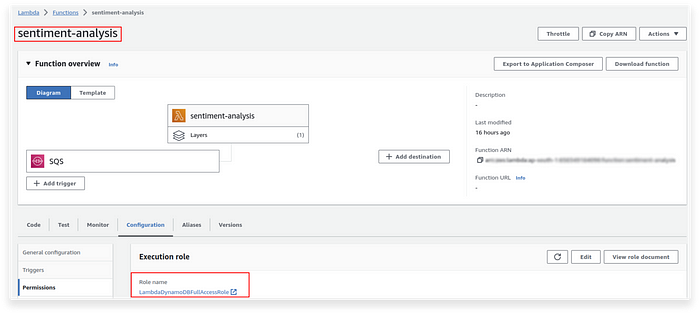

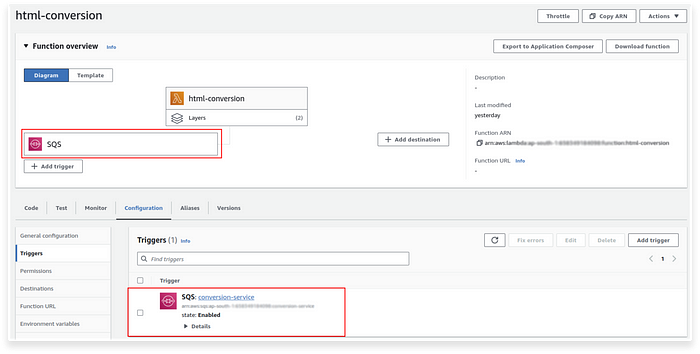

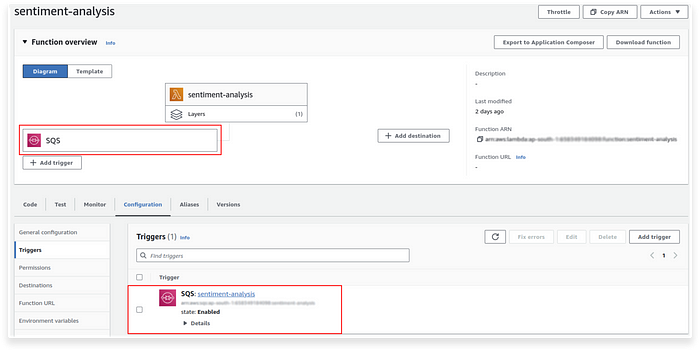

Create two Lambda functions named html-conversion and sentiment-analysis.

The html-conversion function converts Markdown to HTML and stores the result in the target S3 bucket. The sentiment-analysis function calculates sentiment with Amazon Comprehend and stores the results in a DynamoDB table.

Attach the corresponding execution role to each Lambda function.
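A minimal sketch of what the html-conversion handler could look like, assuming the markdown package comes from the custom layer and the output bucket name is read from a hypothetical `TARGET_BUCKET` environment variable. It returns `batchItemFailures`, which only takes effect if ReportBatchItemFailures is enabled on the SQS trigger; the helper names are hypothetical, and the Powertools batch utility would be an alternative to the plain loop.

```python
import json
import os
from urllib.parse import unquote_plus

# Hypothetical environment variable holding the output bucket name.
TARGET_BUCKET = os.environ.get("TARGET_BUCKET", "markdown-target-bucket")


def html_key(markdown_key: str) -> str:
    """Map 'notes/interview.md' to 'notes/interview.html'."""
    base, _, ext = markdown_key.rpartition(".")
    return f"{base}.html" if base and ext.lower() == "md" else f"{markdown_key}.html"


def convert_object(s3, render, bucket: str, key: str) -> str:
    """Read one Markdown object, render it to HTML and write it to TARGET_BUCKET."""
    text = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
    out_key = html_key(key)
    s3.put_object(Bucket=TARGET_BUCKET, Key=out_key,
                  Body=render(text).encode("utf-8"),
                  ContentType="text/html; charset=utf-8")
    return out_key


def lambda_handler(event, context):
    # Imported here so the helpers above can be tested without these packages.
    import boto3     # bundled with the Lambda Python runtime
    import markdown  # provided by the custom layer

    s3 = boto3.client("s3")
    failures = []
    for record in event["Records"]:
        try:
            # SQS body -> SNS envelope -> S3 event notification.
            s3_event = json.loads(json.loads(record["body"])["Message"])
            for s3_record in s3_event.get("Records", []):
                convert_object(s3, markdown.markdown,
                               s3_record["s3"]["bucket"]["name"],
                               unquote_plus(s3_record["s3"]["object"]["key"]))
        except Exception as exc:
            print(f"Failed to process message {record['messageId']}: {exc}")
            # Only this message is retried; after maxReceiveCount it goes to the DLQ.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```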

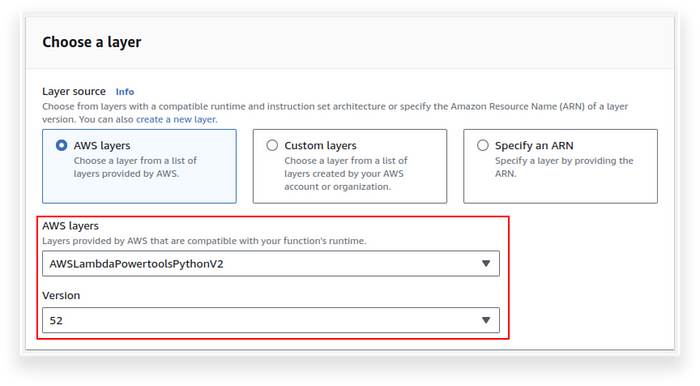

Attach the AWSLambdaPowertoolsPythonV2 layer to both Lambda functions.

The html-conversion function also needs a custom layer containing the markdown package.
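Lambda extracts Python layers to `/opt` and adds `/opt/python` to the import path, so the packages must sit under a top-level `python/` folder in the zip. A sketch that packages a directory populated by `pip install markdown -t build` this way; the function name and paths are hypothetical.

```python
import zipfile
from pathlib import Path


def build_layer_zip(packages_dir: str, output_zip: str) -> list[str]:
    """Zip installed packages under a top-level python/ folder for a Lambda layer."""
    root = Path(packages_dir)
    names = []
    with zipfile.ZipFile(output_zip, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # e.g. build/markdown/core.py -> python/markdown/core.py
                arcname = "python/" + path.relative_to(root).as_posix()
                zf.write(path, arcname)
                names.append(arcname)
    return names
```

Usage: `build_layer_zip("build", "markdown-layer.zip")`, then upload the zip as a new layer version. markdown is pure Python, so the zip does not need to match the function's CPU architecture.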

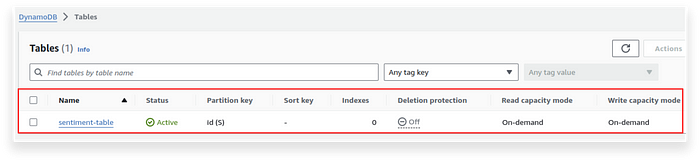

Step 6: Create a DynamoDB table

In this architecture, DynamoDB is the permanent store for the sentiment analysis results. It holds the sentiment scores for each processed Markdown file so they can be used later for insights, reports, or visualizations.

Name the table sentiment-table and use id as the partition key.
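A sketch of the table definition and of an item the sentiment-analysis function might write. The on-demand billing mode and using `bucket/key` as the `id` value are assumptions. Note that the boto3 DynamoDB resource rejects Python floats, so the Comprehend scores are converted to `Decimal`.

```python
from decimal import Decimal

# Arguments for boto3.client("dynamodb").create_table(**SENTIMENT_TABLE_SPEC).
SENTIMENT_TABLE_SPEC = {
    "TableName": "sentiment-table",
    "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],  # partition key
    "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
    "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
}


def sentiment_item(bucket: str, key: str, sentiment: str, scores: dict) -> dict:
    """Build an item for Table.put_item(Item=...); the id format is an assumption."""
    return {
        "id": f"{bucket}/{key}",
        "sentiment": sentiment,
        # Decimal(str(x)) keeps the printed value, e.g. 0.91 -> Decimal("0.91").
        "scores": {name: Decimal(str(value)) for name, value in scores.items()},
    }
```

Usage: `boto3.resource("dynamodb").Table("sentiment-table").put_item(Item=sentiment_item(...))`.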

Step 7: Create event-driven signals

In this step, we connect the services with events so that every upload flows automatically through the processing pipeline.

Defining Event-Driven Processes

Each service triggers the next one through an event. Here is how the events are attached to their respective services:

1. Amazon S3 Event Trigger

An event notification is configured on the markdown-source bucket so that an event is sent whenever an object is created there (uploaded or overwritten).

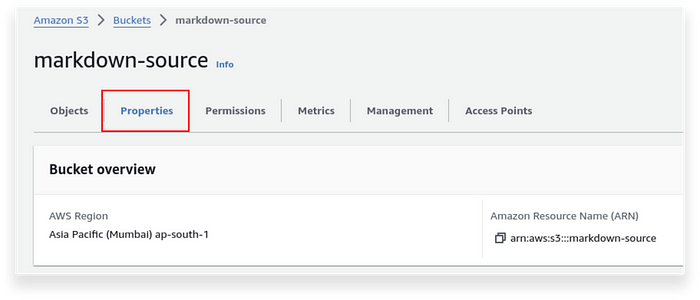

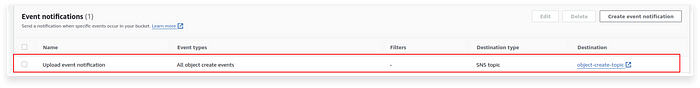

To attach an event notification to the S3 bucket, go to markdown-source and click on Properties.

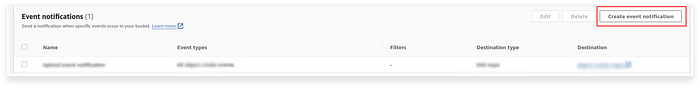

Scroll down and you should see the Event Notification section.

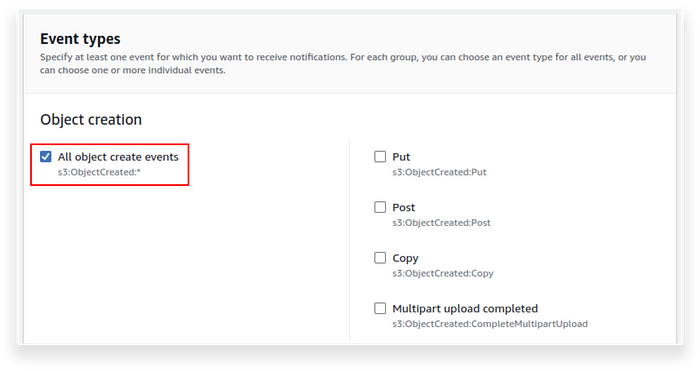

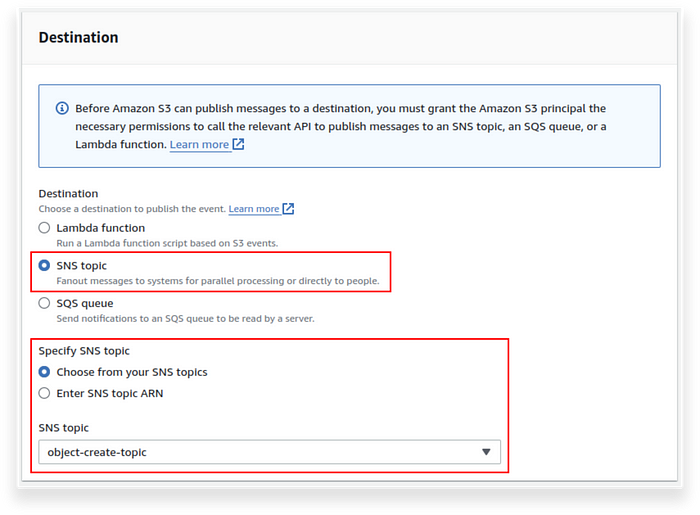

Click on the Create event notification button. Select the Event types and Destination as shown below.

You should see the new event notification listed once it has been created.

2. AWS Lambda Function Invocation
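The same notification can be configured with boto3's `put_bucket_notification_configuration`. This sketch assumes a `.md` suffix filter so that only Markdown files trigger the workflows; the filter and helper names are assumptions. S3 validates the destination when you save, so the SNS topic policy from Step 2 must already be in place.

```python
def notification_config(topic_arn: str, suffix: str = ".md") -> dict:
    """Publish ObjectCreated events for keys ending in suffix to an SNS topic."""
    return {
        "TopicConfigurations": [
            {
                "TopicArn": topic_arn,
                # ObjectCreated:* covers PUT, POST, COPY and multipart uploads,
                # including overwrites of an existing key.
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {"Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}},
            }
        ]
    }


def attach_notification(s3, bucket: str, topic_arn: str) -> None:
    # Caution: this call replaces the bucket's entire notification configuration.
    s3.put_bucket_notification_configuration(
        Bucket=bucket, NotificationConfiguration=notification_config(topic_arn)
    )
```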

Add an SQS trigger to each Lambda function: the conversion-service queue for html-conversion and the sentiment-analysis queue for sentiment-analysis, as shown below.
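Scripted, each trigger is an event source mapping created with boto3's Lambda client (`create_event_source_mapping`). The batch size of 10 and enabling ReportBatchItemFailures are assumptions; the latter lets a handler mark individual messages as failed instead of retrying the whole batch. The helper names are hypothetical.

```python
def sqs_trigger_kwargs(queue_arn: str, function_name: str, batch_size: int = 10) -> dict:
    """Arguments for Lambda create_event_source_mapping with an SQS queue."""
    return {
        "EventSourceArn": queue_arn,
        "FunctionName": function_name,
        "BatchSize": batch_size,
        # Lets the handler return batchItemFailures for partial batch retries.
        "FunctionResponseTypes": ["ReportBatchItemFailures"],
        "Enabled": True,
    }


# Queue-to-function pairing, following the names used in this post.
TRIGGERS = {
    "conversion-service": "html-conversion",
    "sentiment-analysis": "sentiment-analysis",
}


def attach_triggers(lambda_client, queue_arns: dict) -> None:
    """queue_arns maps queue name -> queue ARN."""
    for queue_name, function_name in TRIGGERS.items():
        lambda_client.create_event_source_mapping(
            **sqs_trigger_kwargs(queue_arns[queue_name], function_name)
        )
```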

3. Attaching SNS subscription

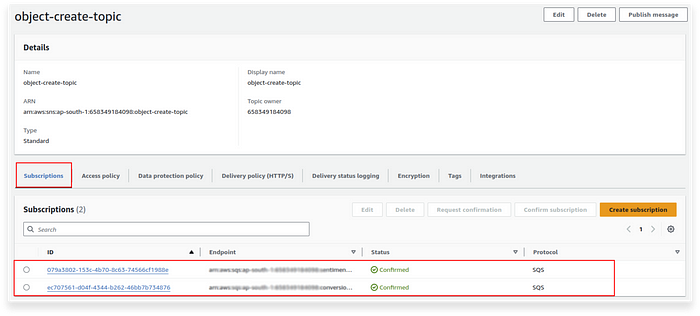

For the SQS queues to receive events from SNS, each queue must be subscribed to the SNS topic.

Create a subscription for each queue under Subscriptions in the SNS console, as shown below.
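Besides the subscription, a queue may also need an access policy that allows the topic to send messages to it; without one, SNS cannot deliver. A sketch with boto3's SNS and SQS clients, assuming the queue has no other policy you need to keep (setting `Policy` replaces any existing one). The helper names are hypothetical.

```python
import json


def queue_policy(queue_arn: str, topic_arn: str) -> str:
    """SQS access policy allowing only the given SNS topic to send to the queue."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "sns.amazonaws.com"},
            "Action": "sqs:SendMessage",
            "Resource": queue_arn,
            "Condition": {"ArnEquals": {"aws:SourceArn": topic_arn}},
        }],
    })


def subscribe_queue(sns, sqs, topic_arn: str, queue_url: str) -> str:
    """Grant the topic access to the queue, then subscribe the queue to the topic."""
    queue_arn = sqs.get_queue_attributes(
        QueueUrl=queue_url, AttributeNames=["QueueArn"]
    )["Attributes"]["QueueArn"]
    sqs.set_queue_attributes(
        QueueUrl=queue_url, Attributes={"Policy": queue_policy(queue_arn, topic_arn)}
    )
    return sns.subscribe(
        TopicArn=topic_arn, Protocol="sqs", Endpoint=queue_arn, ReturnSubscriptionArn=True
    )["SubscriptionArn"]
```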

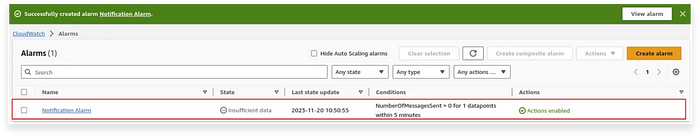

4. Creating a CloudWatch alarm for notification

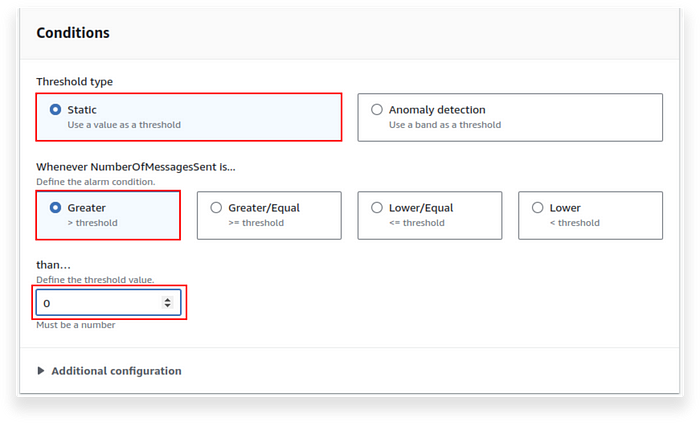

When an event cannot be processed, it is moved to the dead-letter queue (DLQ). To be notified by email when this happens, create a CloudWatch alarm that publishes to an SNS topic.

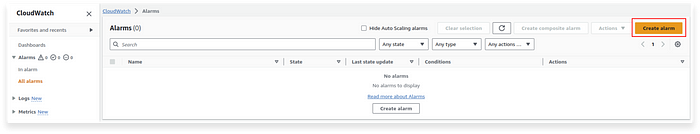

Go to CloudWatch and click on Create alarm.

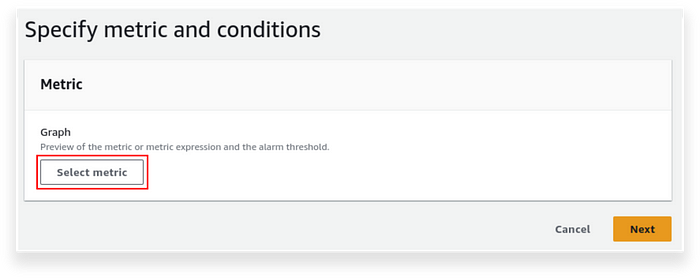

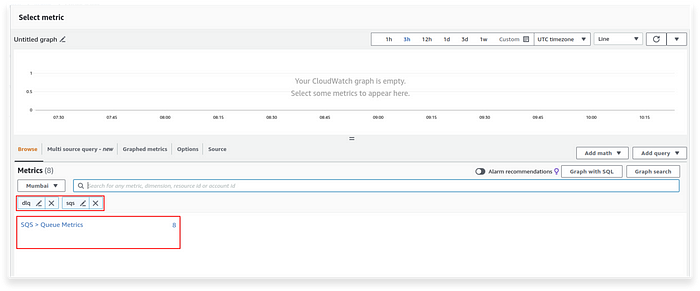

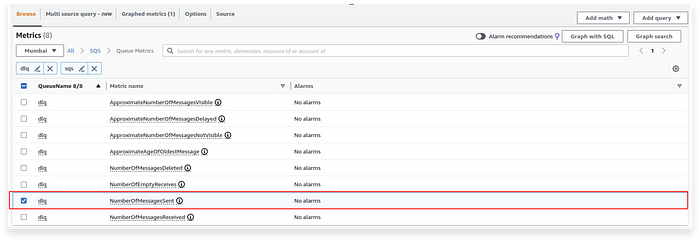

Go to Select metric and filter the metrics as shown below.

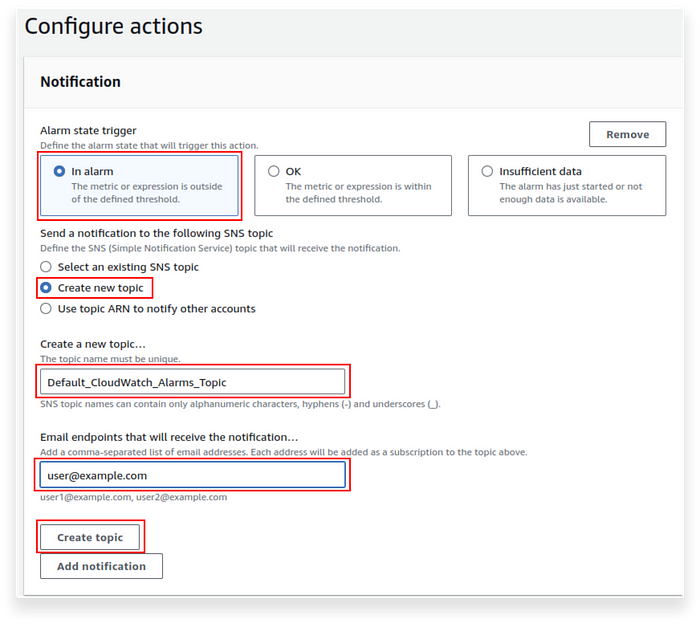

Now follow the steps shown below to create the topic for the alarm.

Configure the actions as shown below and click on Create topic.

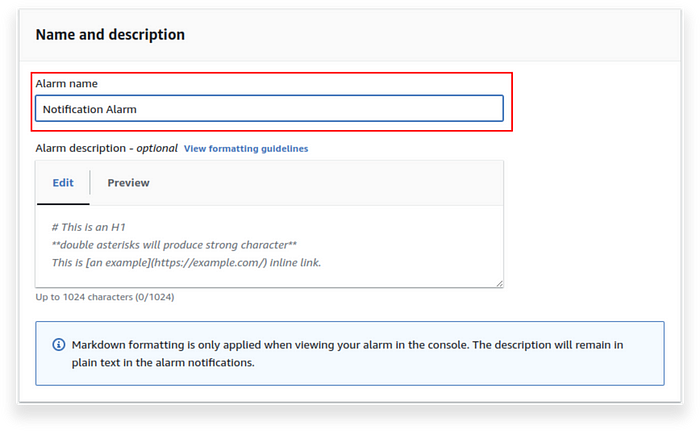

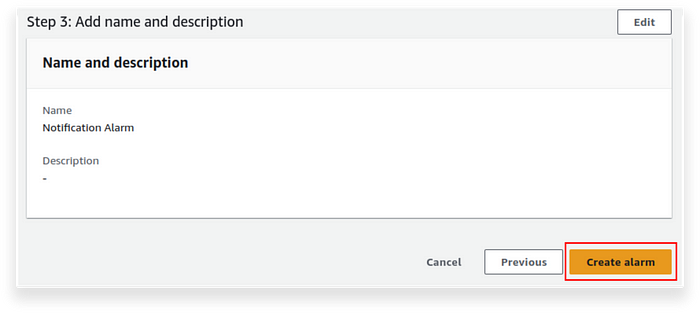

Now name your alarm as shown below:

Once the alarm is created, you should see the alarm as shown below.
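The console steps above roughly correspond to the following boto3 sketch: an email subscription on an alarm topic, plus an alarm on the DLQ's `ApproximateNumberOfMessagesVisible` metric that fires as soon as it holds at least one message. The alarm name, the 60-second period, the missing-data handling and the helper names are assumptions.

```python
def dlq_alarm_kwargs(dlq_name: str, alarm_topic_arn: str) -> dict:
    """Arguments for CloudWatch put_metric_alarm: alarm when the DLQ is not empty."""
    return {
        "AlarmName": f"{dlq_name}-not-empty",  # hypothetical name
        "Namespace": "AWS/SQS",
        "MetricName": "ApproximateNumberOfMessagesVisible",
        "Dimensions": [{"Name": "QueueName", "Value": dlq_name}],
        "Statistic": "Maximum",
        "Period": 60,  # seconds
        "EvaluationPeriods": 1,
        "Threshold": 0.0,
        "ComparisonOperator": "GreaterThanThreshold",  # i.e. at least one message
        "TreatMissingData": "notBreaching",  # idle queues may stop reporting
        "AlarmActions": [alarm_topic_arn],
    }


def create_dlq_alarm(cloudwatch, sns, dlq_name: str, topic_name: str, email: str) -> None:
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    # The recipient must confirm the subscription from the email before alerts arrive.
    sns.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=email)
    cloudwatch.put_metric_alarm(**dlq_alarm_kwargs(dlq_name, topic_arn))
```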

Testing

That completes the implementation. Let's test the architecture to confirm that it works as expected.

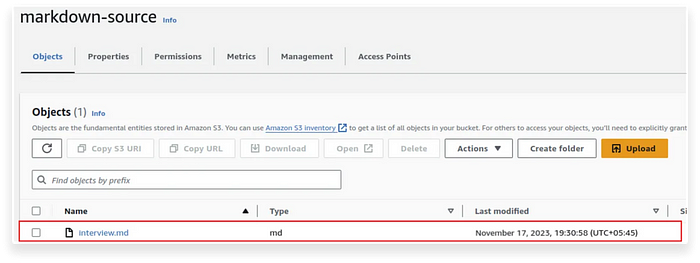

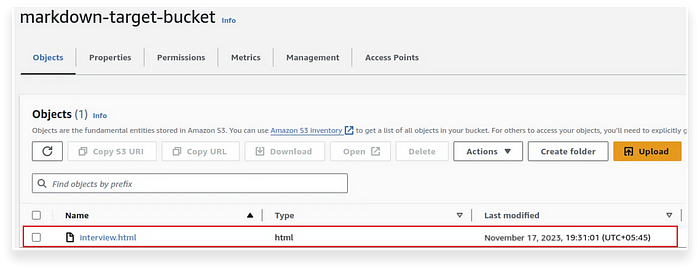

Copy the content from this link into a file named interview.md, and upload the file to the markdown-source bucket as follows.

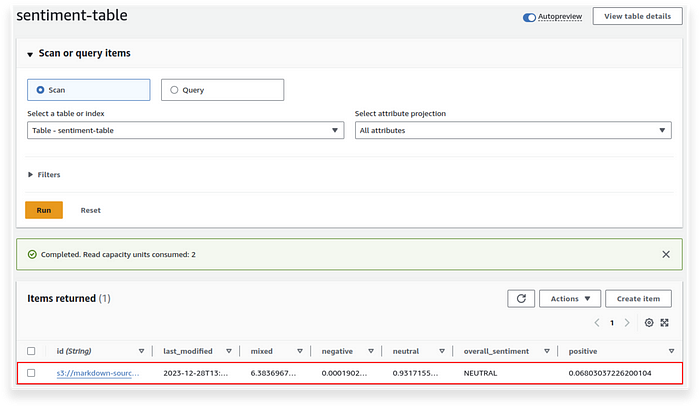

Wait a few seconds, then check the markdown-target-bucket bucket and the sentiment-table table. You should see the following items.

This shows that our file-processing architecture is working as expected.
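The same check can be automated. This sketch assumes a boto3 S3 client, a boto3 DynamoDB `Table` resource for sentiment-table, and that you know the `id` value your sentiment-analysis function writes (the item id format is hypothetical). You would first upload the file, for example with `s3.upload_file("interview.md", "markdown-source", "interview.md")`.

```python
import time


def wait_for_outputs(s3, table, target_bucket: str, html_key: str, item_id: str,
                     timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll until the HTML object and the DynamoDB item both exist, or time out."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        listing = s3.list_objects_v2(Bucket=target_bucket, Prefix=html_key)
        # "Contents" is absent from the response when nothing matches the prefix.
        html_ready = any(obj["Key"] == html_key for obj in listing.get("Contents", []))
        # get_item only includes "Item" when the key exists.
        item_ready = "Item" in table.get_item(Key={"id": item_id})
        if html_ready and item_ready:
            return True
        time.sleep(interval)
    return False
```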

Conclusion

In summary, the Serverless Real-time File Processing Architecture is a practical pattern for event-driven file processing. Built on AWS Lambda's event-driven model, it suits scenarios that need several outputs derived from a single object in near real time. Its modular design, with separate queues and dead-letter queues for the conversion and sentiment analysis workflows, lets each workflow scale and fail independently.

This example addresses a practical data processing problem and can serve as an educational starting point for designing serverless applications.
